When is a feature considered "Done"?

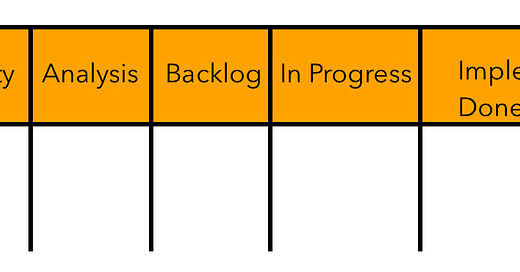

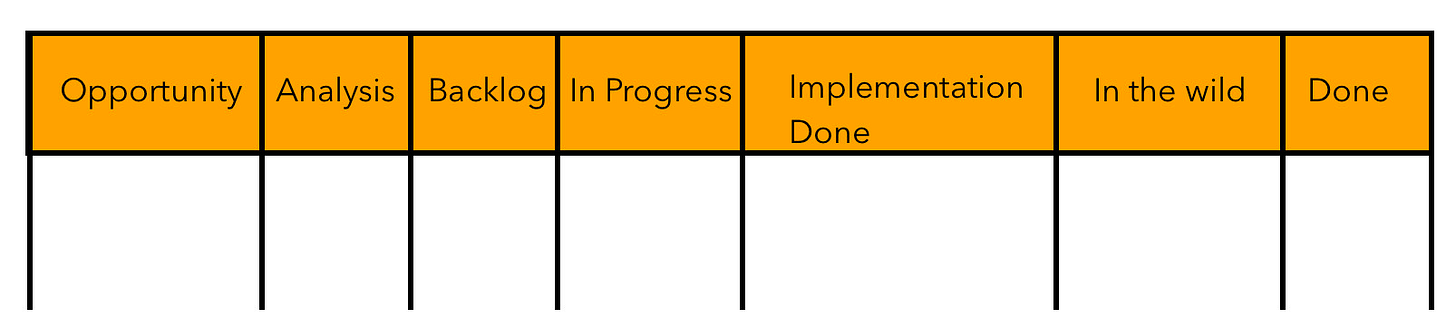

At TeamViewer, we maintain a Portfolio Kanban board for every product. The board keeps stakeholders and the rest of the organization informed about the status of the projects running across the company. It helps us manage resources, project flow, and the teams' WIP limits. We started with a physical board but soon moved to a digital board in JIRA, because many of our distributed remote teams could not access the physical one.

The broad stages of our Portfolio Kanban board look like this:

In the Wild

In the Wild is the stage in which a feature has shipped for the first time and makes its first contact with customers.

Done

Done is the stage in which a feature is considered a success: it is no longer under observation, and no further iterations are planned.

Done Ritual

However, we realised that we were moving many features from In the Wild to Done automatically, right after a release. After some reflection, we became more deliberate about moving features to the Done column, and we now perform an elaborate Done ritual before deciding to move a feature to Done. The product owners lead the ritual in front of the stakeholders and other R&D leads.

Here is how the ritual looks.

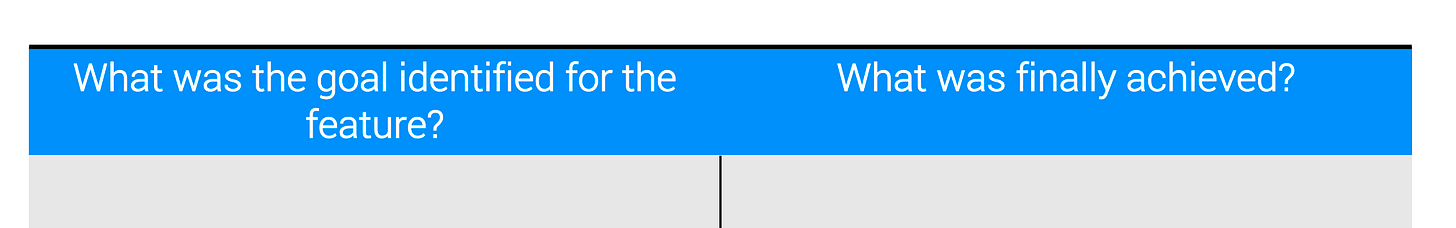

Step 1: How do the goals identified for the feature compare with what was finally achieved?

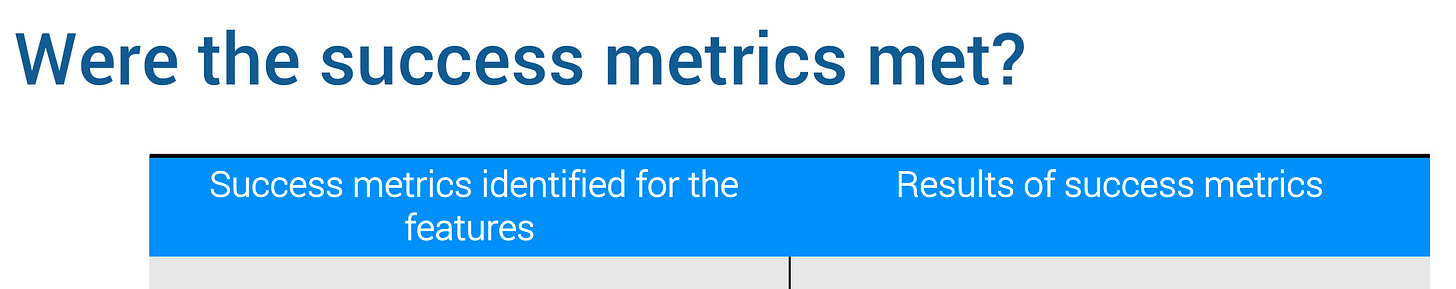

Step 2: Were the success metrics met?

Step 3: Demonstrate customer validation by sharing real customer quotes

At this step, we share quotes from real customers who benefited from the feature and saw positive outcomes.

Step 4: Should we iterate or pull out?

In the last step, depending on whether the success metrics were met, the product owner recommends either iterating on the feature or pulling it out.

https://productlessonsravi.substack.com/p/how-to-prioritise-feature-set-for-15d

I had an interesting conversation about iterating after release with Kalyan Nistala, a product manager at Netflix.

(Skip to the 34-minute mark to hear this part of the conversation.)

How long should we monitor a feature before we measure its success and consider it "Done"?

We were plagued by the question of how long a feature should remain in the wild before we can measure its success.

I sought help from my own network of product managers, and here is what many of them told me.

Answers:

Evgeny Lazarenko, PM, TradeGecko

Rule of thumb: until its daily (or weekly, or monthly) adoption / key success metric regresses to the mean. This makes it easy to assess its success over time, going forward, without having to monitor the dashboard constantly.

This also allows you to describe the user behavior and success in terms of averages.

A slightly more complete answer:

It depends on the feature. If I’m building a transient feature or a setting that users use once in a while, I will track until it regresses to the mean.

If I’m building a larger product with multiple moving pieces and I know that my long-term goal is to grow the adoption of that product, I will track its overall baseline performance for as long as I own that product.

At the same time, I will be measuring the impact that each individual improvement has on that baseline.
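Evgeny's rule of thumb can be made concrete in more than one way. The sketch below is one hypothetical interpretation, not his method: treat "regresses to the mean" as the point where the metric stops drifting, i.e. the average of the most recent window of days is within a small relative tolerance of the window before it. The function names `has_stabilized` and `days_until_stable`, the 7-day window, the 5% tolerance, and the sample adoption series are all illustrative assumptions.

```python
from statistics import mean


def has_stabilized(daily_values, window=7, tolerance=0.05):
    """Return True if the mean of the latest `window` days is within
    `tolerance` (relative) of the mean of the `window` days before it.

    Hypothetical helper: "stabilized" is an assumed stand-in for
    "regressed to the mean"; window and tolerance are illustrative.
    """
    if window <= 0:
        raise ValueError("window must be positive")
    if len(daily_values) < 2 * window:
        return False  # not enough history to compare two full windows
    previous = mean(daily_values[-2 * window:-window])
    latest = mean(daily_values[-window:])
    if previous == 0:
        return latest == 0
    return abs(latest - previous) / abs(previous) <= tolerance


def days_until_stable(daily_values, window=7, tolerance=0.05):
    """Return the first day count at which has_stabilized() holds, or None."""
    for day in range(2 * window, len(daily_values) + 1):
        if has_stabilized(daily_values[:day], window, tolerance):
            return day
    return None


# Made-up daily adoption counts: a launch spike that settles around 220.
adoption = [500, 420, 350, 300, 260, 240, 230, 225, 222, 220, 221,
            219, 220, 222, 221, 220, 219, 221, 220, 220, 221]

print(days_until_stable(adoption))
```

For this made-up series the check first passes on day 18. A longer window smooths out weekly seasonality at the cost of a later verdict, which echoes Evgeny's point that the right horizon depends on how often the feature is used.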

Gary Teo, Regional Technology Director at VLM SEA

Normally about one quarter. We’d give it some runway and disregard the data from the first few weeks to account for the turbulence a change causes.

Kunal Gupta, Product Owner, IG

I track a small feature or enhancement for just a couple of weeks, only to check its impact and make sure that the impact is not negative.

For major functionality, it even becomes part of my monthly KPIs, which are tracked on an ongoing basis and presented to stakeholders.

Muthiah Anand Sankar, Founder, Frog Innovation

If the feature is critical, I would track it daily for a month, and if I see traction, I would continue to track it for up to three months.

If anything is going to work, it will show positive signs within a month. If it doesn't, the feature requires attention; the reason could be anything from bad timing, poor communication, or a poor UI to a technology failure or high pricing.

An optional feature (like "Tell a friend") will take more time to mature. I would still track it for longer, but it wouldn't get priority.

Abhishek Rathore, Head Product Management, Rakuten

Just like most questions in the world, the answer to this one is also "it depends". However, in my experience working on high-traffic internet products, two weeks of observation has generally given me stable enough metrics to conclude confidently on the success or failure of a feature. As people have commented, there are also statistical ways to determine this period. Still, as long as you don't make new changes, metrics tend to become stable after two weeks, especially in e-commerce, where there is a lot of transient day-to-day variation. Two weeks usually gave me enough time to accept or reject my initial hypothesis.

For completely new product launches, we used to give it one month to measure the KPIs set for the product and label it a success or failure.

OVL Kiran Kumar, Managing Partner, OVL Advisor

This depends entirely on how frequently the feature is used (expected vs. actual). You should collect enough data to determine three things:

1) Discoverability

2) Correlation of usage with KPIs (Positive/Negative and to what degree)

3) Correlation of non-usage with KPIs (Positive/Negative and to what degree)

Time to track is a function of collecting adequate data.

In mobile apps with daily usage, it can be as short as a week. In enterprise products, it can be as long as six months.
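Kiran's point that the time to track is a function of collecting adequate data, and Abhishek's remark that there are statistical ways to set the period, can be illustrated with a standard sample-size estimate for a proportion (for example, the share of exposed users who adopt a feature): n = z² · p(1 − p) / E², where p is the expected rate, E the acceptable margin of error, and z the normal quantile for the chosen confidence level. Dividing n by the number of eligible users per day turns it into an observation period. This is a sketch under stated assumptions, not anyone's actual process: the function names, the 20% expected adoption rate, the ±2 percentage point margin, and the daily traffic figures are hypothetical, and the formula assumes independent observations (distinct users), so repeat visits by the same users make the result a lower bound rather than a precise answer.

```python
import math
from statistics import NormalDist


def required_sample_size(expected_rate, margin, confidence=0.95):
    """Observations needed to estimate a proportion within +/- margin.

    Normal-approximation formula n = z^2 * p * (1 - p) / E^2.
    Assumes independent observations (e.g. distinct users).
    """
    if not 0 < expected_rate < 1:
        raise ValueError("expected_rate must be between 0 and 1")
    if margin <= 0:
        raise ValueError("margin must be positive")
    # Two-sided critical value, about 1.96 for 95% confidence.
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return math.ceil(z ** 2 * expected_rate * (1 - expected_rate) / margin ** 2)


def days_to_collect(sample_size, eligible_users_per_day):
    """Days of observation needed to reach sample_size at a steady daily rate."""
    if eligible_users_per_day <= 0:
        raise ValueError("eligible_users_per_day must be positive")
    return math.ceil(sample_size / eligible_users_per_day)


# Hypothetical inputs: 20% expected adoption, +/- 2 percentage points.
n = required_sample_size(expected_rate=0.2, margin=0.02)
print(n)                        # users needed
print(days_to_collect(n, 500))  # high-frequency consumer app
print(days_to_collect(n, 15))   # low-frequency enterprise product
```

With these made-up numbers, about 1,537 users are needed. A consumer app with 500 eligible users a day collects them in 4 days, while an enterprise product with 15 a day needs 103 days, which mirrors the week-versus-months contrast described above.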

All these answers were interesting to me. However, we still have not found an accurate way to decide how long a feature should stay in the wild before we measure its success, so we use our best judgement. If you have better suggestions, do let me know in the comments.
